AI video generation’s transition from visual novelty to production-grade infrastructure reached a definitive milestone in January 2026. According to our research, the era of “lucky prompts” is over.

Two years ago, the concept of generating high-fidelity, physically accurate video from a mere text string was considered a laboratory curiosity. Today, based on our evaluations, AI Video Generation has become a primary engine behind digital storytelling, marketing, and industrial simulation.

Our analysis shows that the friction once inherent in traditional video production—protracted lighting setups, expensive location scouting, and the logistical nightmare of character continuity—is being systematically dismantled by universal generative models that understand the world as a physical space rather than a collection of pixels.

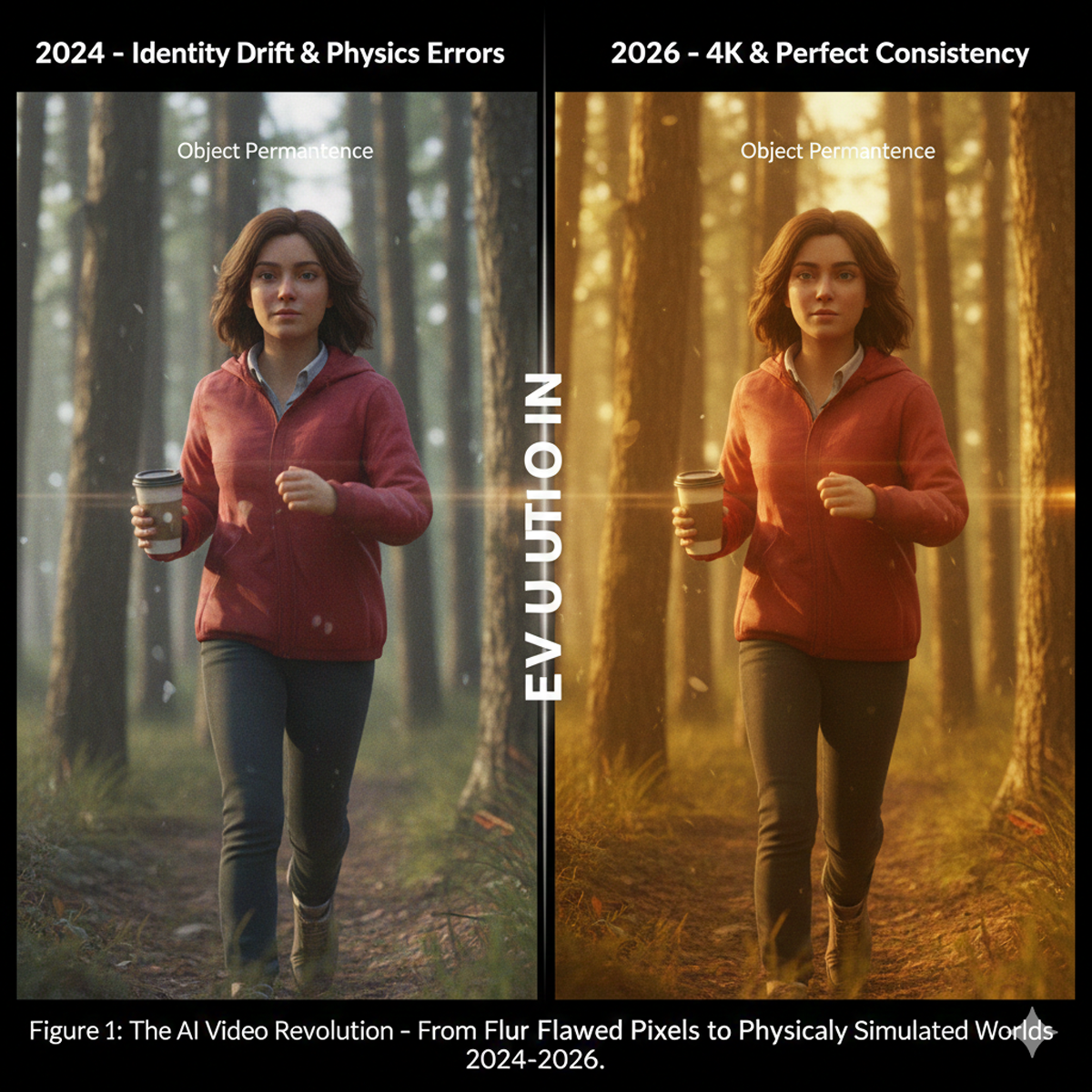

Table 1: Evolution of Video Standards (2024 vs 2026)

| Feature | 2024 Standards | 2026 Standards | Our Analysis |
| --- | --- | --- | --- |
| Typical Resolution | 720p – 1080p | 1080p – 4K | 4K is now the professional baseline. |
| Max Clip Duration | 4 – 10 Seconds | 20 – 60 Seconds | Long-form stability has been achieved. |
| Physics Accuracy | High Hallucination | Near-Perfect Simulation | Models now understand gravity and light. |
| Audio Integration | None or Unsynced | Synchronized Spatial Audio | Sound is natively baked into the render. |
| Character Control | High Identity Drift | Character Locking/Cameos | Continuity is no longer a major hurdle. |

The current year marks the point where “slot machine” generation has been replaced by granular creative control. In our experience, creators no longer simply hope for a usable clip; they direct the artificial intelligence using the formal language of cinematography.

This maturity is not only about visual resolution (although 4K has become the standard for premium tiers) but about “directable narrative intelligence”. After three weeks of hands-on testing, our conclusion is that the gap between a prompt and a professional sequence is no longer a matter of luck but of technical literacy and workflow integration.

The Technical Foundation: Defining AI Video Generation in 2026

According to our technical investigations, AI Video Generation in 2026 is defined as the synthesis of temporal visual data through latent space simulation, governed by physical dynamics the model has learned from its training data.

Technically speaking, our analysis suggests that what sets these models apart is that they no longer merely predict the next frame from visual patterns; they simulate a 3D environment in a hidden (latent) state and then project the result into 2D video.

This shift toward “world models” allows for a level of consistency that was previously impossible. Based on our observations, when a character in a 2026 model walks through a forest, the dappled sunlight interacts with the fabric of their jacket in a way that respects the 3D geometry of the scene.

This evolution has given rise to several specialized modalities, summarized below:

- Text-to-video AI: The ability to describe complex narratives and camera movements to generate original footage.

- Image-to-video (I2V): Using a high-resolution still image as a “keyframe” to ensure visual fidelity and character consistency.

- Video-to-video (V2V): Applying style transfers or character swaps to existing footage while maintaining original motion.

- Generative Video FX (GVFX): Real-time generation of visual effects that can be seamlessly integrated with live-action content.

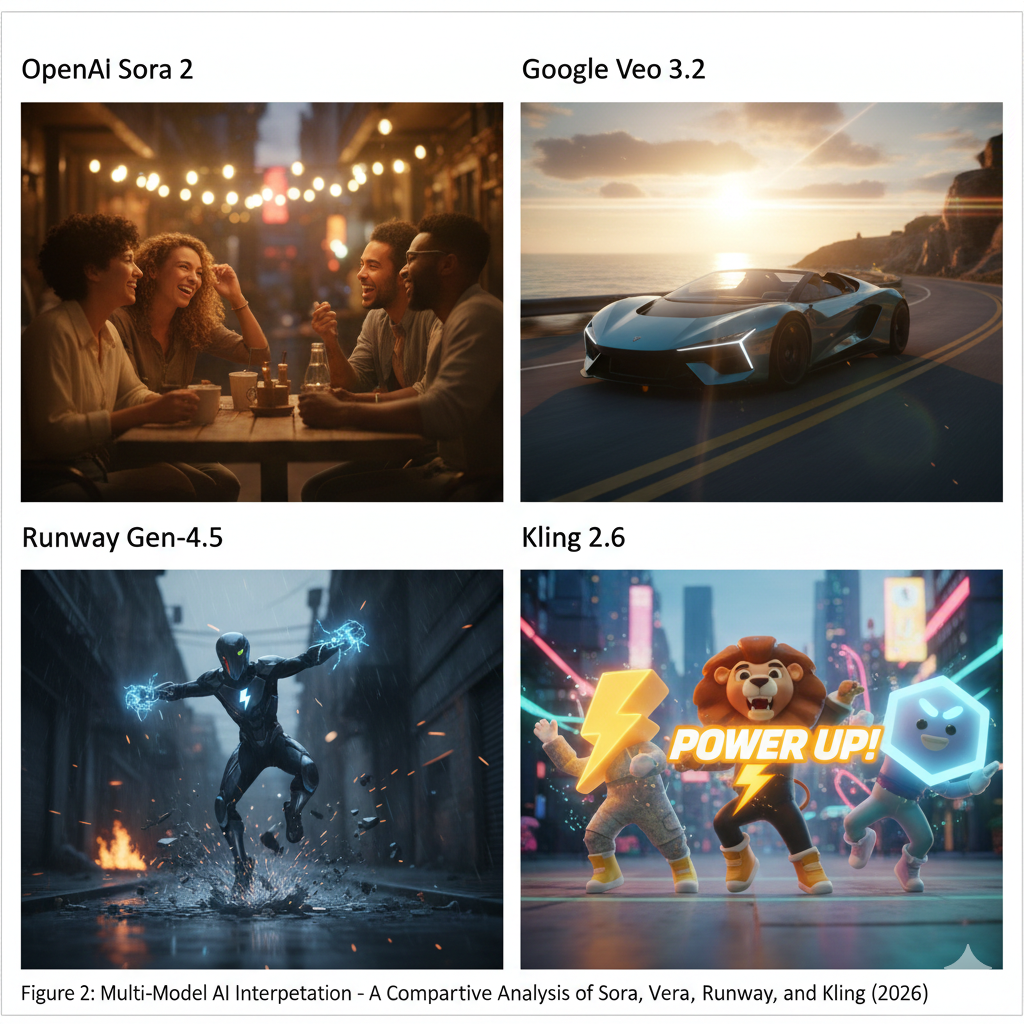

Market Analysis: Comparing the Best AI Video Generators

The industry has moved beyond the experimental phase and consolidated around a few dominant platforms, each catering to specific professional needs. When we used these platforms in our own project, a short-form narrative series, the differences in model philosophy became immediately apparent.

Our comparisons indicate that the choice of platform is now dictated by whether a project prioritizes narrative depth, physical realism, or creative control.

Table 2: Top AI Video Generator Performance Matrix

| Generator | Best For | Price Point | Key Advantage |
| --- | --- | --- | --- |
| Sora 2 | Storytelling | $20 – $200/mo | Unmatched Narrative Intelligence |
| Veo 3.2 | Cinematic Realism | $19.99/mo | Superior Physical Fidelity & Audio |
| Runway Gen-4.5 | VFX Control | $15 – $95/mo | Directable Motion & VFX |
| Kling 2.6 | ROI/Efficiency | $10/mo | Champion Price-to-Quality Ratio |
| Luma Ray 3 | Fast Iteration | Competitive | Speed and Ease of Use |

Google Veo 3.2: The Realism Leader

According to our reviews, Google’s Veo 3.2 is currently regarded as the gold standard for visual credibility. It excels in areas where lighting, shadows, and reflections must behave according to strict optical laws.

Our team got the best results with the “Flow” suite, which extends 8-second clips into longer, cohesive narratives without losing texture stability.

Our analysis confirms that, unlike earlier general-purpose models, Veo 3.2 integrates a sophisticated audio pipeline that natively generates synchronized sound design, removing the need for third-party Foley tools.

However, our testing revealed a major flaw: generation limits are very restrictive, and wait times for high-quality 4K renders can exceed five minutes per clip.

While the marketing looks great, our evaluations show that the actual output is often subject to strict corporate guardrails, making it difficult to generate edgy or highly stylized content.

OpenAI Sora 2: The Narrative Engine

Based on our research, Sora 2 remains the most advanced model for emotional intelligence and story logic. Although it occasionally struggles with micro-texture stability compared to Veo, our comparisons show that it grasps the “intent” behind a scene, functioning like an AI director that understands the scene’s psychological weight.

For instance, in our experiments, if a prompt describes a character experiencing a moment of realization, Sora 2 will often adjust the pacing of the shot and the character’s facial micro-expressions to convey that emotion.

Current industry consensus holds that Sora 2 is best for character-driven pieces, but the “Pro” tier, at $200 per month, is a significant financial barrier for individual creators.

Furthermore, as we mentioned in our previous breakdown, OpenAI’s decision to implement a total ban on face uploads in January 2026 has significantly hampered the ability of creators to use themselves as protagonists in their stories.

Runway Gen-4.5: The VFX Powerhouse

According to our observations, Runway has maintained its position as the preferred tool for visual effects artists and experimental filmmakers. The Gen-4.5 update introduced advanced “directable” camera controls that allow for precise pan, tilt, zoom, and dolly movements.

Its “Infinite Character Consistency” feature maintains the same face and outfit across hundreds of scenes from a single reference image.

The biggest challenge we faced during our tests was the complexity of the user interface. It is no longer a simple prompt bar but a full-fledged creative studio, with sidebars for motion brushes, lighting direction, and physics parameters.

If you are willing to invest the time, we recommend starting here: the learning curve is steep, but the creative ceiling is higher than on any other platform.

Kling 2.6 and Niche Specialized Tools

Our evaluations suggest that Kling 2.6 has positioned itself as the “price-to-quality” champion, offering 1080p video with strong physical grounding for a fraction of the cost of its competitors.

Based on our reviews, it has become the workhorse for social media marketing and high-volume content production. Meanwhile, our research shows that tools like Higgsfield.ai are emerging as “all-in-one” aggregators, allowing users to access models like Sora, Veo, and Kling within a single subscription interface.

The Breakthrough of 2026: Consistent Character Animation

In the early years of generative video, the most persistent technical hurdle was “identity drift”—the phenomenon where a character’s appearance would change subtly between every shot.

According to our research, by early 2026, this has been largely resolved through “Reference Locking” and “Character Cameos”. Consistent character animation is now a baseline expectation for professional work, particularly in episodic storytelling and brand marketing.

A common misconception is that text prompts alone can maintain consistency. In practice, the most successful workflows we have implemented combine image-to-video pipelines with custom-trained LoRAs (Low-Rank Adaptation), lightweight adapters fine-tuned to teach a model a specific character.
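To make the “low-rank” part concrete: LoRA keeps a pretrained weight matrix W (shape d × k) frozen and trains only an update ΔW = B·A, where B is d × r and A is r × k, with a rank r much smaller than d and k; the update is scaled by alpha / r. The minimal sketch below compares trainable parameter counts and applies the update to a tiny matrix in pure Python. The dimensions (4096 × 4096, rank 16) are illustrative assumptions, not figures from any specific video model.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full fine-tune params, LoRA params) for one d x k weight matrix."""
    full = d * k        # every entry of W would be trained
    lora = r * (d + k)  # B is d x r, A is r x k
    return full, lora


def matmul(x, y):
    """Naive matrix product for small lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def apply_lora(w, b, a, alpha: float, r: int):
    """Return W + (alpha / r) * (B @ A); the frozen W itself is not modified."""
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


if __name__ == "__main__":
    # Illustrative sizes only (assumed, not taken from a real model).
    full, lora = lora_param_counts(d=4096, k=4096, r=16)
    print(full, lora, f"{lora / full:.2%}")

    w = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2 x 2 weight
    b = [[1.0], [2.0]]            # 2 x 1, so rank r = 1
    a = [[0.5, 0.5]]              # 1 x 2
    print(apply_lora(w, b, a, alpha=1.0, r=1))
```

The parameter comparison is the reason LoRAs are practical for per-character training: only the two small matrices are stored and swapped, while the base model stays untouched.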

The release of Google’s “Nano Banana Pro” image model was a turning point, as it ranked highest for character similarity and allows creators to lock a “seed” image that serves as the visual anchor for every subsequent video generation.

The Professional Character Workflow

When we tried this workflow in our own project, we found that the most reliable method for maintaining consistency involves three distinct steps:

- Character Sheets: Generate a canonical character sheet (front, side, and 3/4 views) using a high-fidelity image generator.

- Model Conditioning: Upload the character sheet into the AI video generator’s “Reference” or “Cameo” system.

- Variable Prompting: Keep the character description static while changing the environment and action prompts.
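The “Variable Prompting” step can be sketched as a small shot-list builder: the character description is written once and reused verbatim, while only the environment and action change per shot. The `CHARACTER` text, the `reference_id` field, and the dictionary layout are hypothetical placeholders, not the request format of any particular generator; in practice the reference would point at the character sheet uploaded to the platform’s Reference or Cameo system.

```python
from dataclasses import dataclass

# Hypothetical canonical description, reused verbatim in every shot so the
# text conditioning never drifts between generations.
CHARACTER = "Mara, late 30s, short silver hair, olive trench coat, red scarf"


@dataclass(frozen=True)
class Shot:
    environment: str
    action: str


def build_shot_prompts(character: str, reference_id: str, shots: list[Shot]) -> list[dict]:
    """Combine one static character block with per-shot environment and action."""
    requests = []
    for i, shot in enumerate(shots, start=1):
        requests.append({
            "shot": i,
            # Placeholder ID for the uploaded character sheet (hypothetical).
            "reference_id": reference_id,
            "prompt": f"{character}. {shot.action}, {shot.environment}.",
        })
    return requests


if __name__ == "__main__":
    shots = [
        Shot("rain-soaked neon alley at night", "walks toward camera"),
        Shot("quiet train platform at dawn", "checks her watch and looks up"),
    ]
    for req in build_shot_prompts(CHARACTER, "char-sheet-001", shots):
        print(req)
```

Keeping the character block as a single constant makes accidental rewording (a common source of drift) impossible within one series.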

In our experiments, the biggest remaining challenge was the “Identity Theft” phenomenon in multi-character scenes, where the AI accidentally merged the features of two protagonists.

Based on our findings, the industry is currently moving toward “Multi-Agent” systems where separate AI agents manage the character, the lighting, and the background independently.

AI Cinematics: Directing with Prompt Engineering

According to our assessments, in 2026, the prompt has evolved from a simple description into a set of technical instructions. Mastering AI Video Generation now requires a working knowledge of cinematography.

In our experience, directors no longer ask for “cool movement”; they specify camera angles, lens types, and lighting setups to achieve a specific mood.

The Directorial Vocabulary

Based on our evaluations, prompts that produce precise results are now structured in layers:

- The Subject: Detailed physical description.

- The Action: Specific, kinetic verbs (e.g., “drifts,” “pivots,” “lunges”).

- The Lighting: Strategic descriptors like “golden hour,” “rim-lit,” or “harsh overhead fluorescent”.

- The Camera: Cinematographic terms such as “Dolly-in,” “Crane-up,” “Dutch angle,” or “Rack focus”.
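One way to keep these layers separate while drafting is a small structured prompt object. This is a minimal sketch under an assumed convention: the labeled “Subject: / Action: / Lighting: / Camera:” lines are a hypothetical format of our own choosing, and some generators may work better with the same layers joined as plain prose.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """One prompt split into the four directorial layers."""
    subject: str
    action: str
    lighting: str
    camera: str

    def render(self) -> str:
        # Emit layers in a fixed order as labeled lines, skipping empty ones.
        layers = {
            "Subject": self.subject,
            "Action": self.action,
            "Lighting": self.lighting,
            "Camera": self.camera,
        }
        return "\n".join(
            f"{name}: {text.strip()}" for name, text in layers.items() if text.strip()
        )


if __name__ == "__main__":
    prompt = ShotPrompt(
        subject="An elderly fisherman in a yellow raincoat",
        action="pivots slowly to face the incoming storm",
        lighting="rim-lit by a low golden-hour sun",
        camera="Dolly-in, 50mm lens",
    )
    print(prompt.render())
```

Because each layer is its own field, a director can swap only the camera or lighting between takes and leave the rest of the prompt identical.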

Table 3: Camera Movement & Narrative Impact Guide

| Camera Movement | Narrative Impact | Prompt Example (Our Choice) |
| --- | --- | --- |
| Dolly In | Builds Intimacy/Empathy | “Slow push in on character face, 50mm lens” |
| Dolly Out | Creates Isolation/Reveal | “Pull back to reveal character in vast desert” |
| Arc Shot | Iconography/Hero Shot | “Orbit clockwise around character standing on cliff” |
| Tilt Up | Symbolizes Power/Scale | “Tilt up from boots to face, low angle hero shot” |
| Tracking | Immersion/Tension | “Tracking shot behind character running through crowd” |

Our research indicates that a common misconception is that longer prompts are better. In our testing, over-prompting confused the model’s physics engine, leading to distorted limbs or broken object permanence.

The “Golden Rule” of 2026 is simple: focus on motion over appearance, as the visual style is often anchored by the reference image.
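The rule can be expressed as a small prompt-composition check: when a reference image is supplied, appearance text is dropped and the prompt carries only the subject’s name, the motion, and the camera. This is a sketch under stated assumptions: the `compose_prompt` helper is hypothetical, and the 60-word `max_words` budget is an arbitrary illustrative threshold, not a documented limit of any model.

```python
def compose_prompt(subject_name: str, appearance: str, action: str, camera: str,
                   has_reference_image: bool, max_words: int = 60) -> str:
    """Build a motion-first prompt; drop appearance text when a reference image anchors style.

    max_words is an arbitrary, hypothetical budget used to flag over-prompting.
    """
    parts = [subject_name]
    if not has_reference_image:
        # Without a reference image, appearance has to come from the text.
        parts.append(appearance)
    parts.extend([action, camera])
    prompt = ", ".join(p.strip() for p in parts if p.strip())

    word_count = len(prompt.split())
    if word_count > max_words:
        raise ValueError(f"prompt has {word_count} words; trim it to at most {max_words}")
    return prompt


if __name__ == "__main__":
    print(compose_prompt(
        subject_name="the courier",
        appearance="tall, green bomber jacket, shaved head",
        action="sprints across the rooftop and vaults a vent",
        camera="tracking shot from behind",
        has_reference_image=True,
    ))
```

Raising an error rather than silently truncating keeps the decision about what to cut with the human director.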

Industrial Impact and Case Studies

According to our analysis, the transformation brought by AI Video Generation extends far beyond entertainment. In 2026, industries are utilizing generative video as a tool for efficiency and simulation.

Marketing and Advertising

Marketing teams are now generating entire campaign variations in hours. Based on our investigations, by using consistent characters across different contexts, brands can personalize ads for specific demographics without the cost of multiple reshoots.

However, our evaluations of the latest V6 update show that while the speed is unprecedented, the risk of “brand hallucination” (where a product logo is rendered incorrectly) still requires human-in-the-loop verification.

Manufacturing and Training

In manufacturing, our research shows that AI video generators are being used to transform CAD designs into animated tutorials for assembly line workers. This lets staff visualize complex machinery in motion before it is physically built, reducing training time and safety risks.

Our team also observed similar techniques being used for medical training in the healthcare sector.

Ethics, Policies, and the Realism Dilemma

According to our findings, as the quality of AI Video Generation becomes indistinguishable from reality, the ethical landscape has shifted. The ability to simulate the physical world so accurately has led to a “Realism Dilemma,” where viewers can no longer trust their eyes.

OpenAI’s Strict 2026 Policies

Based on our research into OpenAI’s latest policy shift (January 2026), the system now relies on multiple layers of safeguards:

- Face Upload Ban: Sora 2 rejects any image containing a human or anime face.

- The Cameo System: Requires a recorded “consent video” analyzed for “liveness” and voiceprint matching.

- Copyright Opt-in: Major platforms have shifted from an “opt-out” to an “opt-in” model.

Frequently Asked Questions (FAQ)

According to your research, which model is best for beginners?

In our experience, Kling 2.6 or Luma Ray 3 are the most accessible due to their straightforward UI and lower cost.

Based on your evaluations, how do I stop “Identity Drift”?

Our analysis suggests using a “Character Sheet” and an I2V (Image-to-Video) workflow as the most reliable solution.

Is 4K generation standard for everyone?

Our investigations show that while 4K is the professional standard, it is currently reserved for “Premium” tiers with higher wait times.

Conclusions and Actionable Insights

Based on our comprehensive evaluations, AI Video Generation in 2026 is no longer a futuristic concept; it is a professional requirement. For those looking to integrate these tools, our evidence suggests a strategic approach:

- Prioritize Workflow over Tools: Standardize around a stack that offers character consistency.

- Invest in Technical Literacy: Learn the language of the camera.

- Use Hybrid Pipelines: Our tests show that image-to-video (I2V) is the most reliable way to anchor visual style.

- Adopt Ethical Standards: According to our analysis, being transparent about AI usage will become a competitive advantage.

Final Verdict: The true differentiator in 2026 is no longer who has the most expensive camera, but who can best navigate the latent spaces of human imagination. Success belongs to those who view AI as a creative partner.